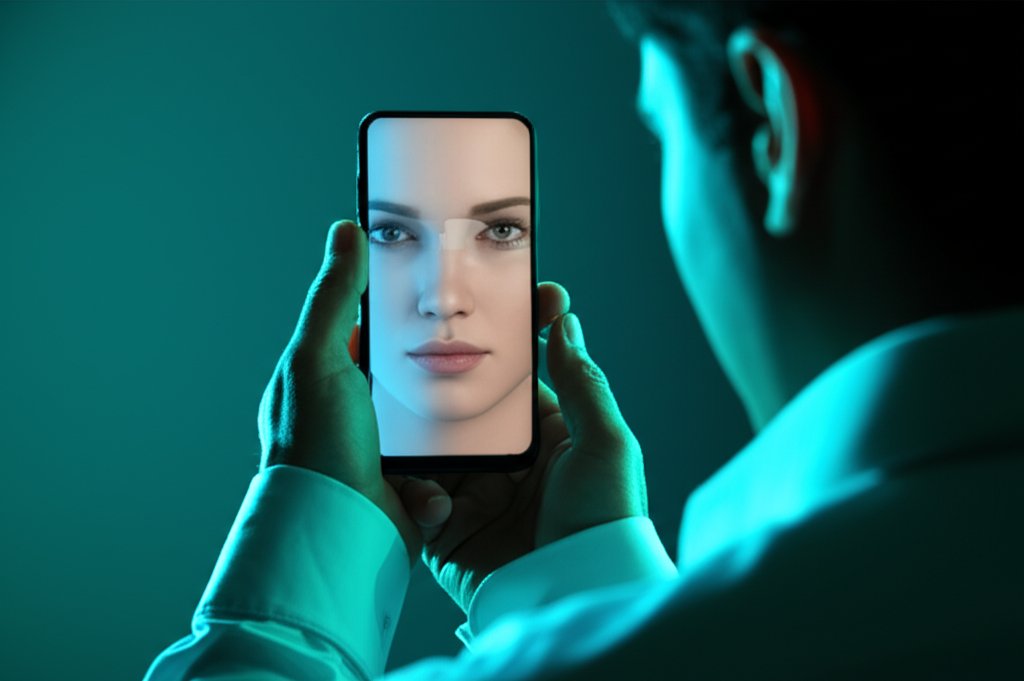

Have you ever received a call that sounded just like your boss, urgently asking for a last-minute wire transfer? Or perhaps a video message from a family member making an unusual, sensitive request? What if I told you that voice, that face, wasn’t actually theirs? That’s the chilling reality of AI-powered deepfakes, and they’re rapidly becoming a serious threat to your personal and business security.

Many of us have long dismissed deepfakes as Hollywood special effects or niche internet humor. As a security professional, I can tell you that this view is dangerously outdated. Deepfakes are no longer theoretical; they are a real, accessible, and increasingly sophisticated tool in the cybercriminal’s arsenal. They’re not just targeting celebrities or high-profile politicians; they’re coming for everyday internet users and small businesses like yours, and they make traditional scams far more effective.

In this post, we’re going to pull back the curtain on AI deepfakes. We’ll explore exactly how these convincing fakes can breach your personal and business security and how to spot the red flags that betray their synthetic nature. Most importantly, we’ll equip you with practical, non-technical strategies to fight back and protect what matters most.

What Exactly Are AI Deepfakes? (And Why Are They So Convincing?)

Let’s start with a foundational understanding. What are we actually talking about when we say “deepfake”?

The “Fake” in Deepfake: A Simple Definition

A deepfake is synthetic media (a video, audio clip, or image) that has been created or drastically altered using artificial intelligence, specifically a branch called “deep learning.” That’s where the “deep” in deepfake comes from. Today’s tools can make fabricated content look or sound remarkably real, often mimicking a specific person’s appearance, voice, or mannerisms with alarming accuracy.

A Peek Behind the Curtain: How AI Creates Deepfakes (No Tech Jargon, Promise!)

You don’t need to be a data scientist to grasp the gravity of the threat here. Think of it this way: AI “learns” from real images, videos, and audio of a target person. It studies their facial expressions, their unique speech patterns, their voice timbre, and even subtle body language. Then it uses what it has learned to generate entirely new content featuring that person, making them appear to say or do things they never actually did. Because the technology is improving quickly, these fakes are becoming more sophisticated and harder to distinguish from reality. It’s a bit like a highly skilled forger, except that instead of paint and canvas, the forger uses data and algorithms.

How AI-Powered Deepfakes Can Breach Your Personal & Business Security

So, how do these digital imposters actually hurt you? The ways are diverse, insidious, and frankly, quite unsettling.

The Ultimate Phishing Scam: Impersonation for Financial Gain

Deepfakes don’t just elevate traditional phishing scams; they redefine them. Imagine receiving a phone call where an AI-generated voice clone of your CEO urgently directs your finance department to make a last-minute wire transfer to a “new supplier.” Or perhaps a video message from a trusted client asking you to update their payment details to a new account. These aren’t hypothetical scenarios.

- Voice Cloning & Video Impersonation: Cybercriminals are leveraging deepfakes to impersonate high-ranking executives (like a CEO or CFO) or trusted colleagues. Their goal? To trick employees into making urgent, unauthorized money transfers or sharing sensitive financial data. We’ve seen high-profile incidents where companies have lost millions to such scams, and the same tactics can easily be scaled down to target small businesses. For example, a UK energy firm reportedly transferred over £200,000 after its CEO was fooled by a deepfake voice call impersonating the chief executive of its German parent company.

- Fake Invoices/Supplier Requests: A deepfake can add a powerful layer of credibility to fraudulent requests for payments to fake suppliers, making an email or call seem entirely legitimate.

- Targeting Individuals: It’s not just businesses at risk. A deepfake voice or video of a loved one could be used to pressure someone into sending money or authorizing a bank transfer, preying on emotional connection and a manufactured sense of urgency.

Stealing Your Identity: Beyond Passwords

Deepfakes represent a terrifying new frontier in identity theft. They can be used not just to mimic existing identities with frightening accuracy but potentially to create entirely new fake identities that appear legitimate.

- Imagine a deepfake video or audio of you being used to pass online verification checks for new accounts, or to gain access to existing ones.

- They also pose a significant and evolving threat to biometric authentication such as facial or voice recognition. Many systems include anti-spoofing checks designed to detect fakes, but their strength varies, and voice-based verification in particular has reportedly been fooled by cloned voices. As the technology advances, deepfakes could bypass more of these measures unless the systems are continuously updated against new attack techniques.

Tricking Your Team: Advanced Social Engineering Attacks

Social engineering relies on psychological manipulation, exploiting human vulnerabilities rather than technical ones. Deepfakes make these attacks far more convincing by putting a familiar, trusted face and voice to the deception. This makes it significantly easier for criminals to manipulate individuals into clicking malicious links, downloading malware, or divulging confidential information they would normally never share.

- We’re seeing deepfakes used in what is sometimes called “vibe hacking”: sophisticated emotional manipulation designed to get you to lower your guard and comply with unusual requests. Attackers craft a scenario that triggers a specific emotion (fear, empathy, urgency) to bypass your critical thinking and logical defenses.

Damaging Reputations & Spreading Misinformation

Beyond direct financial and data theft, deepfakes can wreak havoc on an individual’s or business’s reputation. They can be used to create utterly false narratives, fabricate compromising situations, or spread highly damaging misinformation, eroding public trust in digital media and in the person or entity being faked. This erosion of trust, both personal and institutional, is a significant and lasting risk for everyone online.

How to Spot a Deepfake: Red Flags to Watch For

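While AI detection tools are emerging and improving, your own vigilance remains your most immediate defense. Cultivating a keen eye and ear is crucial. Keep in mind that a high-quality fake may show none of these signs, so treat them as reasons to verify rather than as proof either way. Here are some key red flags to watch for: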

Visual Clues (Eyes, Faces, Movement)

- Eyes: Look for unnatural or jerky eye movements, abnormal blinking patterns (either too little, making the person seem robotic, or too much, appearing erratic). Sometimes, the eyes might not seem to track properly or may lack natural sparkle and reflection.

- Faces: Inconsistencies in lighting, shadows, skin tone, or facial features are common. You might spot patchy skin, blurry edges around the face where it meets the background, or an overall “uncanny valley” effect—where something just feels off about the person’s appearance, even if you can’t pinpoint why.

- Movement: Awkward or stiff body language, unnatural head movements, or a general lack of natural human micro-expressions and gestures can be giveaways. The movement might seem less fluid, almost puppet-like.

- Lip-Syncing: Poor lip-syncing that doesn’t quite match the audio is a classic sign. The words might not align perfectly with the mouth movements, or the mouth shape might be inconsistent with the sounds being made.

Audio Clues (Voices & Sound)

- Voice Quality: The voice might sound flat, monotone, or strangely emotionless, lacking the natural inflections and nuances of human speech. It could have an unnatural cadence, strange pitch shifts, or even a subtle robotic tone that doesn’t quite sound authentic.

- Background Noise: Listen carefully for background noise that doesn’t fit the environment. If your boss is supposedly calling from their busy office, but you hear birds chirping loudly or complete silence, that’s a significant clue.

- Speech Patterns: Unnatural pauses, repetitive phrasing, or a distinct lack of common filler words (like “um,” “uh,” or “like”) can also indicate a synthetic voice.

Behavioral Clues (The “Gut Feeling”)

This is often your first and best line of defense. Trust your instincts, and always verify.

- Unexpected Requests: Any unexpected, unusual, or urgent request, especially one involving money, sensitive information, or a departure from established procedure, should immediately raise a red flag. Cybercriminals rely on urgency and fear to bypass critical thinking.

- Unfamiliar Channels: Is the request coming through an unfamiliar channel, or does it deviate from your established communication protocols? If your boss always emails about transfers and suddenly calls with an urgent request, be suspicious.

- “Something Feels Off”: If you have a general sense that something “feels off” about the interaction—the person seems distracted, the situation is unusually tense, or the request is simply out of character for the individual or context—listen to that gut feeling. It could be your brain subconsciously picking up subtle cues that you haven’t consciously processed yet.

Your Shield Against Deepfakes: Practical Protection Strategies

Don’t despair! While deepfakes are a serious and evolving threat, there are very practical, empowering steps you can take to defend yourself and your business.

For Individuals: Protecting Your Personal Privacy

- Think Before You Share: Every photo, video, or audio clip you share online—especially publicly—can be used by malicious actors to train deepfake models. Be cautious about the amount and quality of personal media you make publicly available. Less data equals fewer training opportunities for scammers.

- Tighten Privacy Settings: Maximize privacy settings on all your social media platforms, messaging apps, and online accounts. Limit who can see your posts, photos, and personal information. Review these settings regularly.

- Multi-Factor Authentication (MFA): This is absolutely crucial. Even if a deepfake tricks someone into giving up a password, MFA adds a vital second layer of defense. It requires a second form of verification (like a code from your phone or a biometric scan on your own device) that a fake voice or video alone cannot supply, as long as you never share those codes with anyone who asks for them. Enable MFA wherever it’s offered.

- Strong, Unique Passwords: This is standard advice, but always relevant and foundational. Use a robust password manager to create and securely store strong, unique passwords for every single account. Never reuse passwords.

- Stay Skeptical: Cultivate a healthy habit of questioning unexpected or unusual requests, even if they seem to come from trusted contacts or familiar sources. Verify, verify, verify.

For Small Businesses: Building a Deepfake Defense

Small businesses are often targeted because they might have fewer dedicated IT security resources than larger corporations. But you can still build a robust and effective defense with a proactive approach!

- Employee Training & Awareness: This is your absolute frontline defense. Conduct regular, engaging training sessions to educate employees about deepfakes, their various risks, and how to spot the red flags. Foster a culture of skepticism and verification where it’s not just okay, but actively encouraged, to question unusual requests or communications.

- Robust Verification Protocols: This is arguably the most critical step for safeguarding financial and data security.

- Mandatory Two-Step Verification for Sensitive Actions: Implement a mandatory secondary verification process for any financial transfers, data requests, or changes to accounts. This means if you get an email request, you must call back the known contact person on a pre-verified, official phone number to verbally confirm the request.

- Never Rely on a Single Channel: If a request comes via email, verify by phone. If it comes via video call, verify via text or a separate, independent call. Always use an established, separate communication channel that the deepfake attacker cannot control.

- Clear Financial & Data Access Procedures: Establish and rigorously enforce strict internal policies for approving financial transactions and accessing sensitive data. Everyone should know the process and follow it without exception. A single standard process leaves attackers no shortcuts or “special cases” to exploit.

- Keep Software Updated: Regularly update all operating systems, applications, and security software. These updates often include critical security patches that close the vulnerabilities malware might exploit if a deepfake-driven scam tricks someone into downloading it.

- Consider Deepfake Detection Tools (As a Supplement): AI-powered deepfake detection software exists and can add a supplementary layer of defense, particularly for larger organizations. For most small businesses without dedicated IT security teams, though, it is no replacement for human vigilance, strong protocols, and awareness.

- Develop an Incident Response Plan: Have a simple, clear plan in place. What do you do if a deepfake attack is suspected or confirmed? Who do you contact internally? How do you contain the threat? How do you communicate with affected parties and law enforcement? Knowing these steps beforehand can save crucial time and minimize damage.
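For teams that track payment approvals in a script or internal tool, the verification protocols above can be written down as a simple check. The Python sketch below is illustrative only: the names `Request`, `Confirmation`, `is_approved`, `KNOWN_CONTACTS`, and `SENSITIVE_ACTIONS`, and the sample contact details, are hypothetical and not part of any product. It encodes one rule: a sensitive request proceeds only after a confirmation that you started yourself, using contact details already on file rather than any supplied in the request.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical directory of pre-verified contact details, maintained internally.
# It is never updated from details that arrive inside a request.
KNOWN_CONTACTS = {
    "ceo": {"phone": "+1-555-0100", "email": "ceo@example.com"},
}

# Assumed categories of sensitive actions, for illustration only.
SENSITIVE_ACTIONS = {"wire_transfer", "change_payment_details", "share_sensitive_data"}


@dataclass
class Request:
    requester: str  # who the request claims to come from
    action: str     # what is being asked for
    channel: str    # how it arrived, e.g. "email", "phone", "video"


@dataclass
class Confirmation:
    channel: str       # channel used to confirm, e.g. "phone" or "email"
    contact_used: str  # the number or address we actually contacted
    outbound: bool     # True if we started this contact ourselves
    confirmed: bool    # did the person reached confirm the request?


def is_approved(request: Request, confirmation: Optional[Confirmation]) -> bool:
    """Allow a sensitive request only after independent, outbound confirmation."""
    if request.action not in SENSITIVE_ACTIONS:
        return True  # routine requests follow normal procedure
    if confirmation is None or not confirmation.confirmed:
        return False  # not yet verified: do not act
    if not confirmation.outbound:
        return False  # replying inside the original conversation is not verification
    known = KNOWN_CONTACTS.get(request.requester, {})
    # The contact must match the details on file for that channel,
    # not a number or address supplied in the suspicious request.
    return known.get(confirmation.channel) == confirmation.contact_used


if __name__ == "__main__":
    req = Request("ceo", "wire_transfer", "phone")
    print(is_approved(req, None))                                                  # False
    print(is_approved(req, Confirmation("email", "ceo@example.com", True, True)))  # True
    print(is_approved(req, Confirmation("phone", "+1-555-0199", True, True)))      # False
```

The key design choice is that the internal directory is the only source of trusted contact details; a callback number included in a suspicious message is never consulted. A real process would also need logging and an escalation path, which this sketch leaves out.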

What to Do If You Suspect a Deepfake Attack

Immediate and decisive action is key to mitigating damage:

- Do NOT act on the request: This is the first and most crucial step. Do nothing further, make no transfers, and share no information until you’ve independently verified the request.

- Verify Independently: Reach out to the supposed sender through a different, known communication channel. If they emailed, call their official number (don’t use a number provided in the suspicious email). If they called, send a separate text or email to a known, established address.

- Report It: Inform your IT department or your designated security contact immediately. Report it to the platform where it occurred (e.g., email provider, social media platform). Consider reporting to relevant authorities or law enforcement if it involves financial fraud or significant identity theft.

- Seek Expert Advice: If financial losses, data breaches, or significant reputational damage have occurred, consult with cybersecurity or legal experts immediately to understand your next steps and potential recourse.

AI deepfakes are a serious, evolving threat that demands our constant vigilance and proactive defense. They challenge our fundamental perceptions of truth and trust in the digital world. But with increased awareness, practical steps, and a commitment to robust verification, individuals and small businesses can significantly reduce their risk and protect their assets. By understanding the threat, learning how to spot the red flags, and implementing strong, layered security protocols, you empower yourself and your team to navigate this complex and dangerous landscape.

Protect your digital life and business today! Implement multi-factor authentication everywhere possible, educate your team, and download our free Deepfake Defense Checklist for an actionable guide to securing your communications and assets.